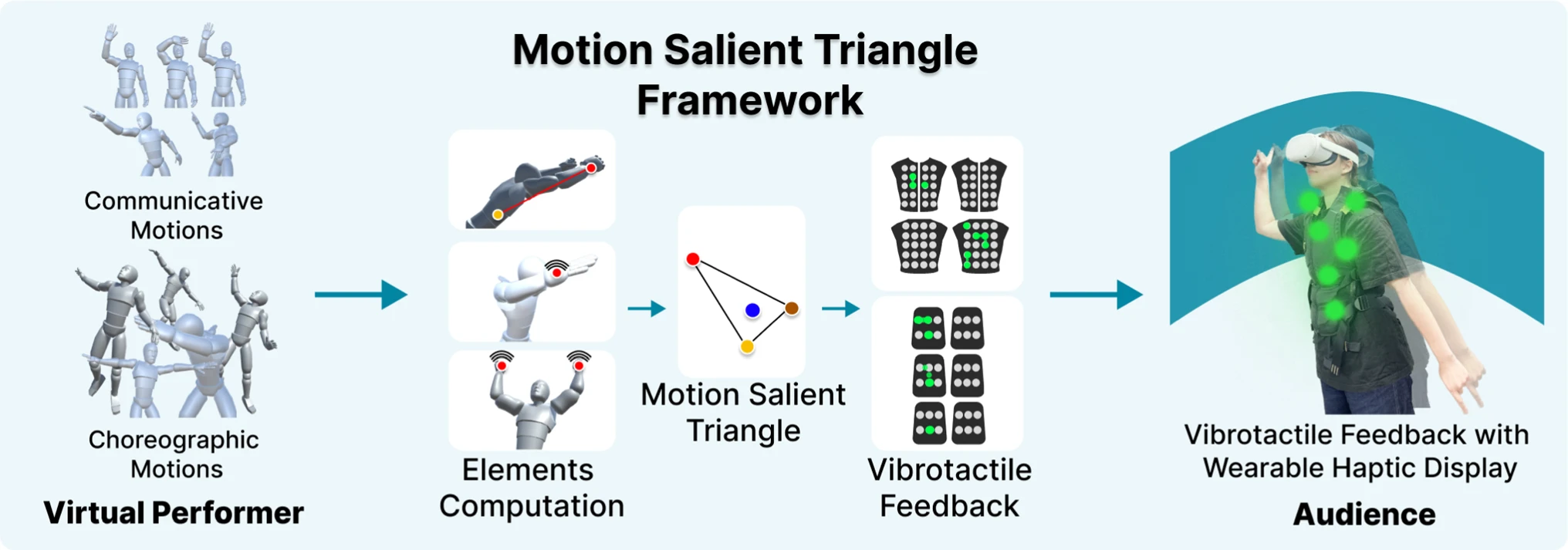

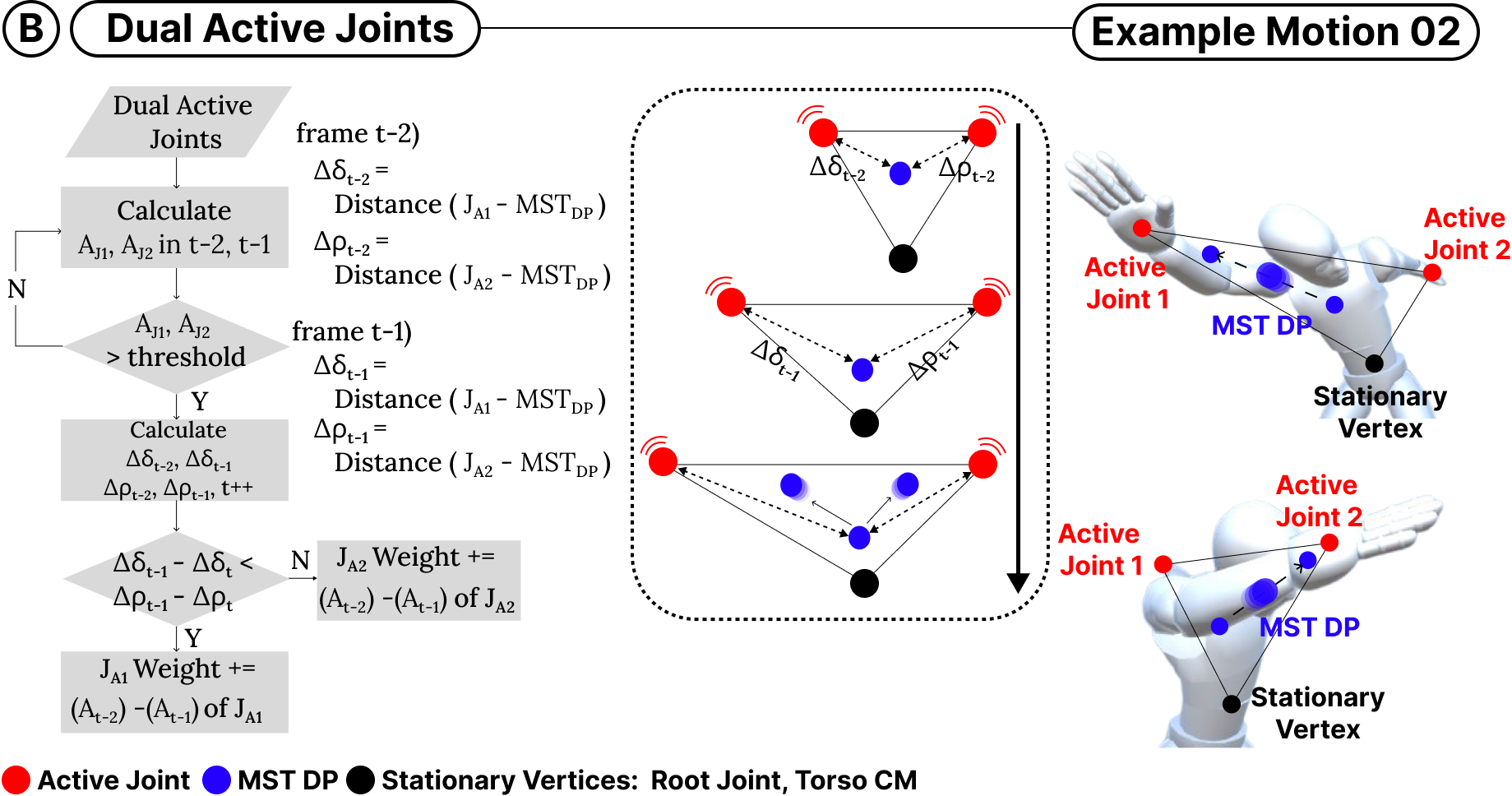

We present a novel haptic rendering framework that translates a performer’s motions into wearable vibrotactile feedback for an immersive virtual reality (VR) performance experience. Our rendering pipeline extracts meaningful vibrotactile parameters, including intensity and location, from the performer’s upper-body movements, which play a significant role in a dance performance. Accordingly, we customize a haptic vest and sleeves to support vibrotactile feedback on the front and back of the torso as well as the shoulders. To capture essential movements from the VR performance, we propose a method called motion salient triangle (MST). MST uses the movements of key skeleton joints to compute the associated haptic parameters. Our method supports translating both choreographic and communicative motions into vibrotactile feedback. Through a series of user studies, we validate that users prefer our method over conventional motion-to-tactile and audio-to-tactile methods.

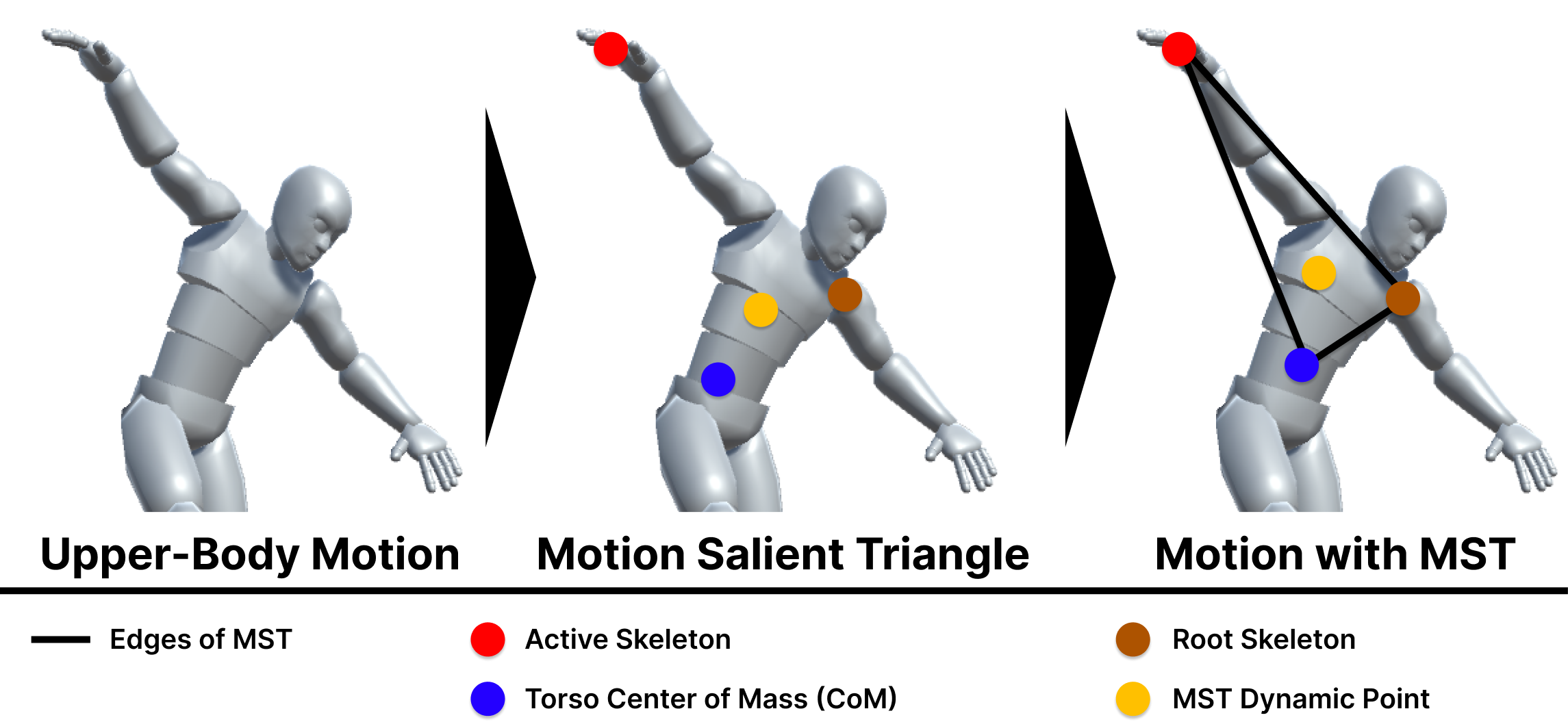

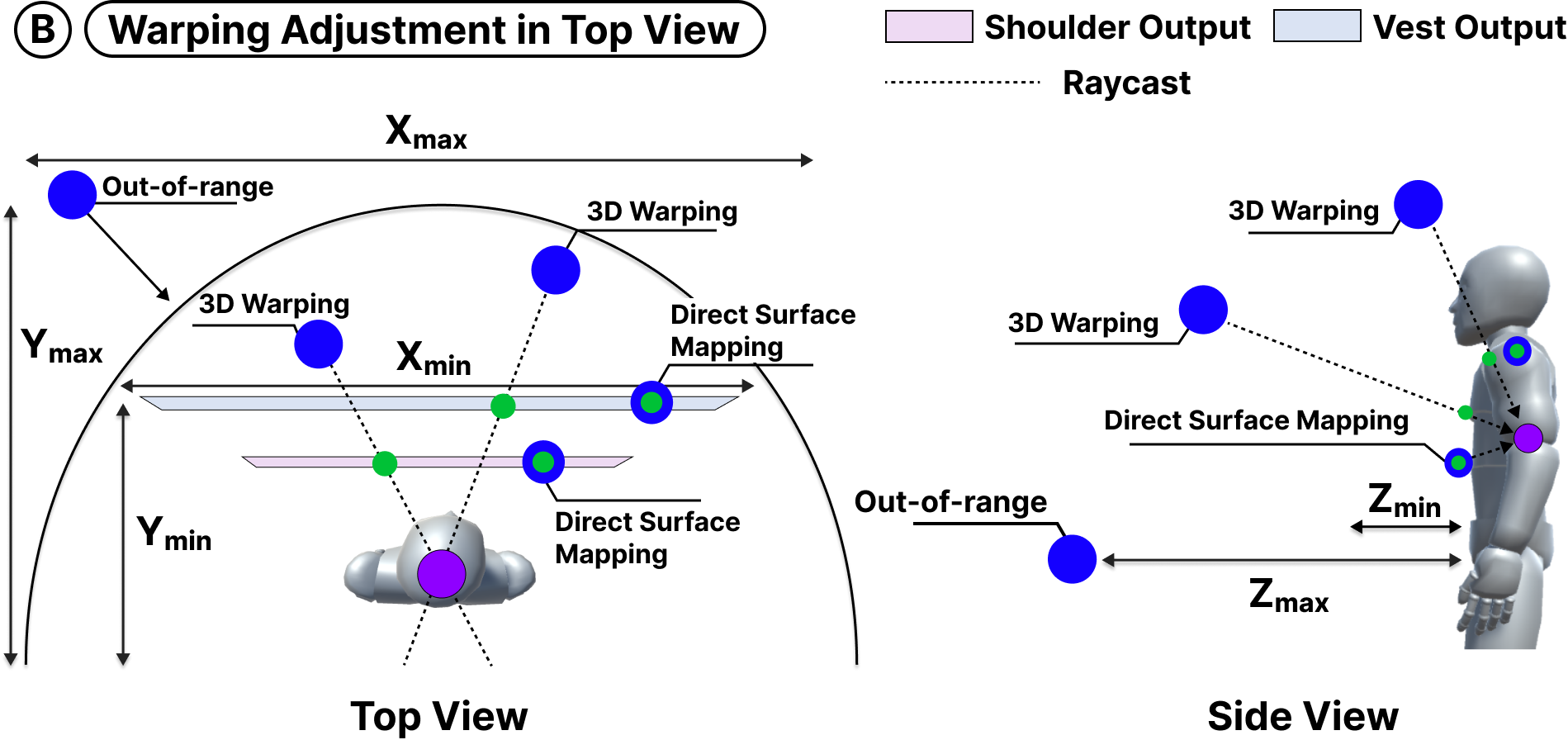

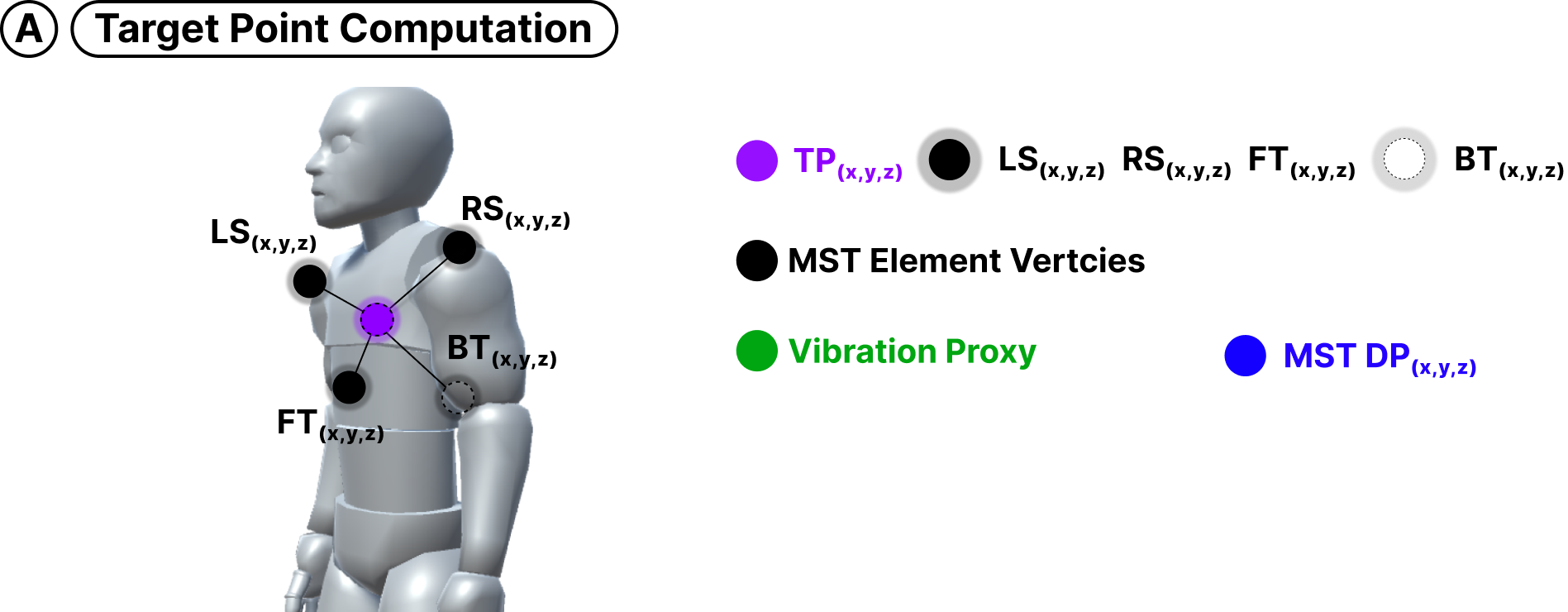

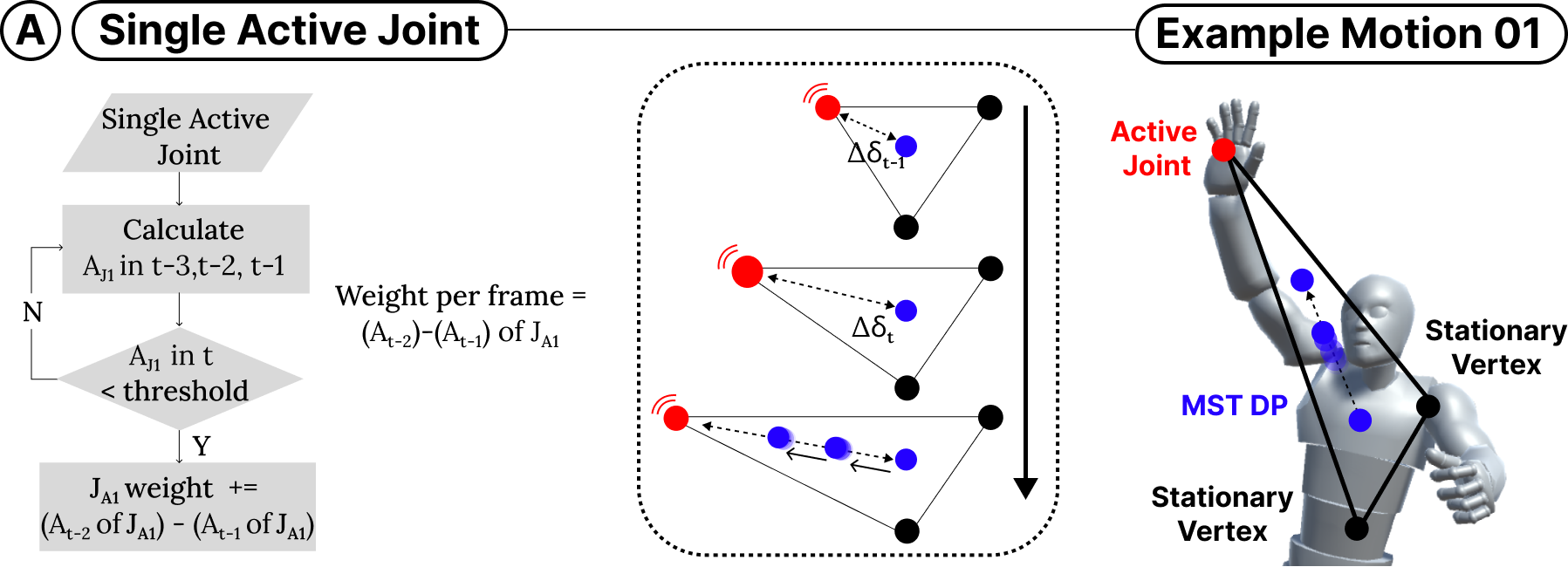

Previous researchers introduced a haptic effect using an RGB-image-based visual saliency map that works with audio data. In our case, we add three-dimensional (3D) motion data so that every movement of the performer can be translated into a meaningful haptic effect. We propose a motion salient triangle (MST) that aims to effectively translate the characteristics of movements into vibrotactile feedback. In this section, we describe our rendering design, in which MST processes spatiotemporal parameters extracted from 3D joint coordinates.
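To make the idea of deriving intensity and location from 3D joint coordinates concrete, the following is a minimal sketch and not the MST formulation from the paper. It assumes a triangle spanned by three hypothetical joints (`left_wrist`, `right_wrist`, `chest`), takes the fastest-moving joint as the feedback location, and scales its speed by an assumed `max_speed` to obtain an intensity in [0, 1]. The joint names, the speed-based mapping, and the use of triangle area as a spatial descriptor are all illustrative assumptions.

```python
import math

# Hypothetical joint set; the joints actually used by MST are not specified here.
TRIANGLE_JOINTS = ("left_wrist", "right_wrist", "chest")


def triangle_area(a, b, c):
    """Area of the 3D triangle with vertices a, b, c, each an (x, y, z) tuple."""
    ab = [b[i] - a[i] for i in range(3)]
    ac = [c[i] - a[i] for i in range(3)]
    # Half the magnitude of the cross product of two edge vectors.
    cross = (
        ab[1] * ac[2] - ab[2] * ac[1],
        ab[2] * ac[0] - ab[0] * ac[2],
        ab[0] * ac[1] - ab[1] * ac[0],
    )
    return 0.5 * math.sqrt(sum(v * v for v in cross))


def joint_speed(prev, curr, dt):
    """Speed of one joint between two frames separated by dt seconds."""
    return math.dist(prev, curr) / dt


def frame_to_haptics(prev_frame, curr_frame, dt, max_speed=3.0):
    """Map two consecutive skeleton frames to illustrative haptic parameters.

    Returns (intensity, location, area), where intensity is clamped to [0, 1],
    location is the name of the fastest joint, and area is the current
    triangle area. max_speed is an assumed normalization constant.
    """
    speeds = {
        j: joint_speed(prev_frame[j], curr_frame[j], dt) for j in TRIANGLE_JOINTS
    }
    location = max(speeds, key=speeds.get)
    intensity = min(speeds[location] / max_speed, 1.0)
    area = triangle_area(*(curr_frame[j] for j in TRIANGLE_JOINTS))
    return intensity, location, area
```

In a real pipeline, the returned location would still have to be mapped to a specific actuator on the vest or sleeves, and the intensity to a drive amplitude; both mappings depend on the hardware layout and are left out of this sketch.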

@article{jung2024hapmotion,
  title={HapMotion: motion-to-tactile framework with wearable haptic devices for immersive VR performance experience},
  author={Jung, Kyungeun and Kim, Sangpil and Oh, Seungjae and Yoon, Sang Ho},
  journal={Virtual Reality},
  volume={28},
  number={1},
  pages={13},
  year={2024},
  publisher={Springer}
}